ByteByteGo Top Coding Interview Problems

The coding problems featured in the book Coding Interview Patterns by ByteByteGo are also available on their official webpage.

For convenience, I have compiled the list of these problems in CSV format at the bottom of this page. Additionally, I have included the corresponding LeetCode links, problem IDs, and titles, where applicable. (The CSV format can be easily converted into an Excel or Numbers document for further use.)

The problem list

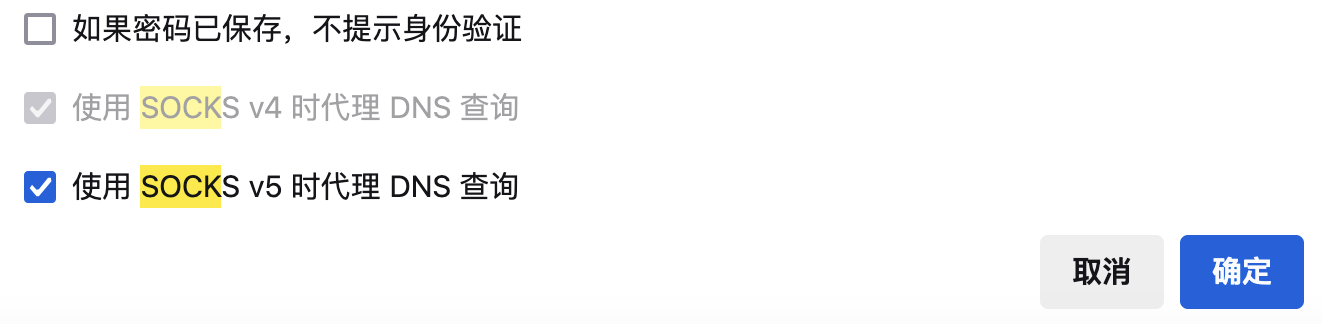

Enable "Proxy DNS when using SOCKS5" in Firefox

When using a SOCKS proxy in Firefox, enabling ‘Proxy DNS when using SOCKS v5’ under Network Settings can help resolve some network issues. Without this option enabled, Firefox may fail to connect to sites like google.com when accessed from mainland China, likely due to DNS pollution.

在Firefox里配置SOCKS代理时,记得钩上“使用SOCKS v5时代理DNS查询”。否则,Firefox在访问google.com等网站时很有可能失败, 特别是从中国大陆访问这些站点时。

Sharing SSH Tunnels Across a Local Network

Run below command on machine A. It starts a SOCKS server listening on the port 8080, and forwards all traffic to that SOCKS server to the remote host, over the secure channel.

# ssh -C -D :8080 -N <username>@<remote-host>

ssh -C -D :8080 -N test_user@1.2.3.4

Note the value of the -D option, :8080, which is prefixed with a :.

-D :8080 means the listening port is bound to all interfaces of machine A, not just to

localhost.

Other machines in the same local network now can access that SOCKS proxy using the IP address of machine A.

Also configure the network settings on machine A to use a fixed IP address, so that other machines don’t have to update the SOCKS proxy IP often. (Choose “Using DHCP with manual address” in MacOS Network settings.)

To use the SOCKS proxy for commands in Terminal, set http_proxy and https_proxy.

Like,

export http_proxy=socks5://192.168.31.100:8080 https_proxy=socks5://192.168.31.100:8080

This is a workaround to fix the broken SSH tunneling after I upgraded my Mac to MacOS 15.0 Sequoia.

LeetCode 91 Decode Ways Java Solution

The Problem

A message containing letters from A-Z can be encoded into numbers using the following mapping:

'A' -> "1"

'B' -> "2"

...

'Z' -> "26"

To decode an encoded message, all the digits must be grouped then mapped back into letters using the reverse of the mapping above (there may be multiple ways). For example, "11106" can be mapped into:

"AAJF"with the grouping(1 1 10 6)"KJF"with the grouping(11 10 6)

Note that the grouping (1 11 06) is invalid because "06" cannot be mapped into 'F' since "6" is different from "06".

Given a string s containing only digits, return the number of ways to decode it.

Check LeetCode link for more details and example input & output.

A Recursive Solution

Below is a straightforward recursive solution.

public int numDecodings(String s) {

return numberOfDecodingStartAt(s, 0);

}

int numberOfDecodingStartAt(String s, int index) {

if (index == s.length()) {

return 1;

}

int result = 0;

if (isChar(s, index, index + 1)) {

result += numberOfDecodingStartAt(s, index + 1);

}

if (index + 2 <= s.length() && isChar(s, index, index + 2)) {

result += numberOfDecodingStartAt(s, index + 2);

}

return result;

}

boolean isChar(String s, int start, int end) {

// "06" cannot be mapped into 'F' since "6" is different from "06".

if (s.charAt(start) == '0') {

return false;

}

int code = Integer.parseInt(s.substring(start, end));

return code > 0 && code < 27; // 1 - 26

}

Dynamic Programming Solutions

A Top Down Approach

Improve the performance by caching the results of sub problems.

LeetCode 213 House Robber II Java Solution

The Problem

You are a professional robber planning to rob houses along a street. Each house has a certain amount of money stashed. All houses at this place are arranged in a circle. That means the first house is the neighbor of the last one. Meanwhile, adjacent houses have a security system connected, and it will automatically contact the police if two adjacent houses were broken into on the same night.

Given an integer array nums representing the amount of money of each house, return the maximum amount of money you can rob tonight without alerting the police.

Check LeetCode link for more details and example input & output.

A Dynamic Programming Solution

It’s not allowed to rob both house 0 and house nums.length - 1, because they are adjacent hourses.

So the problem becomes finding the greater amount between robbing houses except the first and

robbing house except the last.

The two sub problems, robbing houses except the first and robbing house except the last, can be solved using a bottom up approach described in this post.

A Java solution is listed below.

public int rob(int[] nums) {

if (nums.length == 1) { // an edge case

return nums[0];

}

return Math.max(rob1(nums, 0, nums.length - 2), rob1(nums, 1, nums.length - 1));

}

/*

This method is to solve the 'house robber I' problem.

Houses are located in `nums`, from `start` to `end`.

*/

int rob1(int[] nums, int start, int end) {

int r1 = nums[end];

int r2 = 0;

for (int i = end - 1; i >= start; i--) {

int tmp = r1;

r1 = Math.max(nums[i] + r2, r1);

r2 = tmp;

}

return r1;

}

The time complexity is O(n).

A Recursive Approach

Below is a straightforward recursive solution.

LeetCode 300 Longest Increasing Subsequence Java Solution

The Problem

Given an integer array nums, return the length of the longest strictly increasing subsequence.

Check LeetCode link for more details and example input & output.

Dynamic Programming Solutions

A Recursive Approach

Comparing with the original problem, it’s easier to solve the longest increasing subsequence

which starts at the index i recursively. Let’s call it LIS[i], longest increasing subsequence

of nums starting at the index i.

The original problem becomes finding the maximum value among LIS[0], LIS[1], …, LIS[nums.length - 1].

public int lengthOfLIS(int[] nums) {

int max = 1;

for (int i = 0; i < nums.length; i++) {

max = Math.max(max, lengthOfLISStartAtIndex(nums, i));

}

return max;

}

int lengthOfLISStartAtIndex(int[] nums, int index) {

if (index == nums.length - 1) { // the base case

return 1;

}

int result = 1;

for (int i = index + 1; i < nums.length; i++) {

// this test guarantees getting an increasing sub-sequence

if (nums[i] > nums[index]) {

result = Math.max(result, 1 + lengthOfLISStartAtIndex(nums, i));

}

}

return result;

}

A Top Down Approach

Improve the recursive solution with caching.

public int lengthOfLIS(int[] nums) {

Map<Integer, Integer> cache = new HashMap<>();

int result = 1;

for (int i = 0; i < nums.length; i++) {

result = Math.max(result, lengthOfLIS(nums, i, cache));

}

return result;

}

// length Of LIS starting at `index`

int lengthOfLIS(int[] nums, int index, Map<Integer, Integer> cache) {

if (index == nums.length - 1) {

return 1;

}

if (cache.get(index) != null) {

return cache.get(index);

}

int result = 1;

for (int i = index + 1; i < nums.length; i++) {

if (nums[i] > nums[index]) {

result = Math.max(result, 1 + lengthOfLIS(nums, i, cache));

}

}

cache.put(index, result);

return result;

}

A Bottom Up Approach

It’s easy to calculate LIS[i] using a bottom up approach too.

LIS[i] is the maximum of below values.

- 1 (since at least

nums[i]is a candidate of LIS starting ati) 1 + LIS[i+1], ifnums[i]<nums[i+1]1 + LIS[i+2], ifnums[i]<nums[i+2]- …

Base case is LIS[nums.length - 1] is 1.

Below is a Java solution.

LeetCode 139 Word Break Java Solution

The Problem

Given a string s and a dictionary of strings wordDict, return true if s can be segmented into a space-separated sequence of one or more dictionary words.

Note that the same word in the dictionary may be reused multiple times in the segmentation.

Check LeetCode link for more details and example input & output.

Dynamic Programming Solutions

A Top Down Approach

public boolean wordBreak(String s, List<String> wordDict) {

return wordBreak(s, 0, wordDict, new Boolean[s.length()]);

}

boolean wordBreak(String s, int index, List<String> wordDict, Boolean[] dp) {

if (index == s.length()) {

return true;

} else if (index > s.length()) {

return false;

}

if (dp[index] != null) {

return dp[index];

}

for (String word: wordDict) {

if (index + word.length() > s.length()

|| !s.substring(index, index + word.length()).equals(word)) {

continue;

}

if (wordBreak(s, index + word.length(), wordDict, dp)) {

dp[index] = true;

return true;

}

}

dp[index] = false;

return false;

}

A Bottom Up Approach

dp[i] is a sub problem, whose input is a sub string of s, starting at the index i.

dp[i] is true, if there is at least one word w in wordDict, that makes dp[i + len(w)] true.

The base case is that dp[len(s)] is true. And, dp[0] is the original problem.

Below is a Java solution.

LeetCode 62 Unique Paths Java Solution

The Problem

There is a robot on an m x n grid. The robot is initially located at the top-left corner (i.e., grid[0][0]). The robot tries to move to the bottom-right corner (i.e., grid[m - 1][n - 1]). The robot can only move either down or right at any point in time.

Given the two integers m and n, return the number of possible unique paths that the robot can take to reach the bottom-right corner.

Check LeetCode link for more details and example input & output.

Dynamic Programming Solutions

Solve it use the bottom up approach.

dp(m, n) = dp(m, n - 1) + dp(m - 1, n)

dp(m, n) is the problem with a m x n grid as the input.

The answer of dp(m, n) is the sum of dp(m, n - 1) and dp(m - 1, n),

where dp(m, n - 1) means the robot chooses to move right and dp(m - 1, n)

means the robot chooses to move down.

Base cases:

dp(i, 1)is1. That is if the grid is a one-column grid, the robot only has one choice, which is going down.dp(1, i)is1too. On a one-row grid, the robot can only go right.

The sub problem dp(i, j) has two dimensions, so it’s a 2D dynamic programming problem.

We can use a matrix, i.e. a 2d array.

public int uniquePaths(int m, int n) {

// dp[i][j] is the answer for dp(i+1, j+1)

int[][] dp = new int[m][n];

// base case: dp(1, i) = 1

for (int i = 0; i < n; i++) {

dp[0][i] = 1;

}

// base case: dp(i, 1) = 1

for (int i = 0; i < m; i++) {

dp[i][0] = 1;

}

for (int i = 1; i < m; i++) {

for (int j = 1; j < n; j++) {

dp[i][j] = dp[i - 1][j] + dp[i][j - 1];

}

}

return dp[m - 1][n - 1];

}

Actually, when calculate dp[i][j], we only need the “previous” row in

the matrix, i.e. dp[i - 1][j]. So we can save some space, by using only two rows.

See Java code below.

LeetCode 55 Jump Game Java Solution

The Problem

You are given an integer array nums. You are initially positioned at the array’s first index, and each element in the array represents your maximum jump length at that position.

Return true if you can reach the last index, or false otherwise.

Check LeetCode link for more details and example input & output.

Dynamic Programming Solutions

A Top Down Approach

A Java solution.

public boolean canJump(int[] nums) {

return canJump(nums, 0, new Boolean[nums.length]);

}

/*

starting at position `index`, return `true` if it can reach the end.

*/

boolean canJump(int[] nums, int index, Boolean[] dp) {

// if we reach the end, i.e. the last index

if (index == nums.length - 1) {

dp[index] = true;

return true;

}

// check the cache

if (dp[index] != null) {

return dp[index];

}

// try all possible jumps from the position of the `index`

for (int i = 1; i <= nums[index]; i++) {

if (canJump(nums, index + i, dp)) {

dp[index] = true;

return true;

}

}

// tried all possible jumps and unable to reach the end,

// therefore, return false when getting here

dp[index] = false;

return false;

}

A Bottom Up Approach

LeetCode 322 Coin Change Java Solution

The Problem

You are given an integer array coins representing coins of different denominations and an integer amount representing a total amount of money.

Return the fewest number of coins that you need to make up that amount. If that amount of money cannot be made up by any combination of the coins, return -1.

You may assume that you have an infinite number of each kind of coin.

Check LeetCode link for more details and example input & output.

Dynamic Programming Solutions

A Top Down Approach

A Java solution.

public int coinChange(int[] coins, int amount) {

return coinChange(coins, amount, new HashMap<>());

}

int coinChange(int[] coins, int amount, Map<Integer, Integer> dp) {

if (amount < 0) {

return -1;

}

if (amount == 0) { // a base case & edge case

return 0;

}

if (dp.get(amount) != null) {

return dp.get(amount);

}

int min = amount + 1; // ①

for (int i = 0; i < coins.length; i++) {

int changes = 1 + coinChange(coins, amount - coins[i], dp);

// `changes == 0` meaning the above `coinChange` call return `-1`,

// no need to update `min` in this case

if (changes != 0 && changes < min) {

min = changes;

}

}

// if `min` stays at its initial value, it means no answer for input `amount`

if (min == amount + 1) {

min = -1;

}

dp.put(amount, min);

return min;

}

For initial value of min, (line ①), amount + 1 is big enough.

Because even if coin 1 exists, the maximum value of changes is amount.